DEVELOP

1. Introduction to Resonance AI

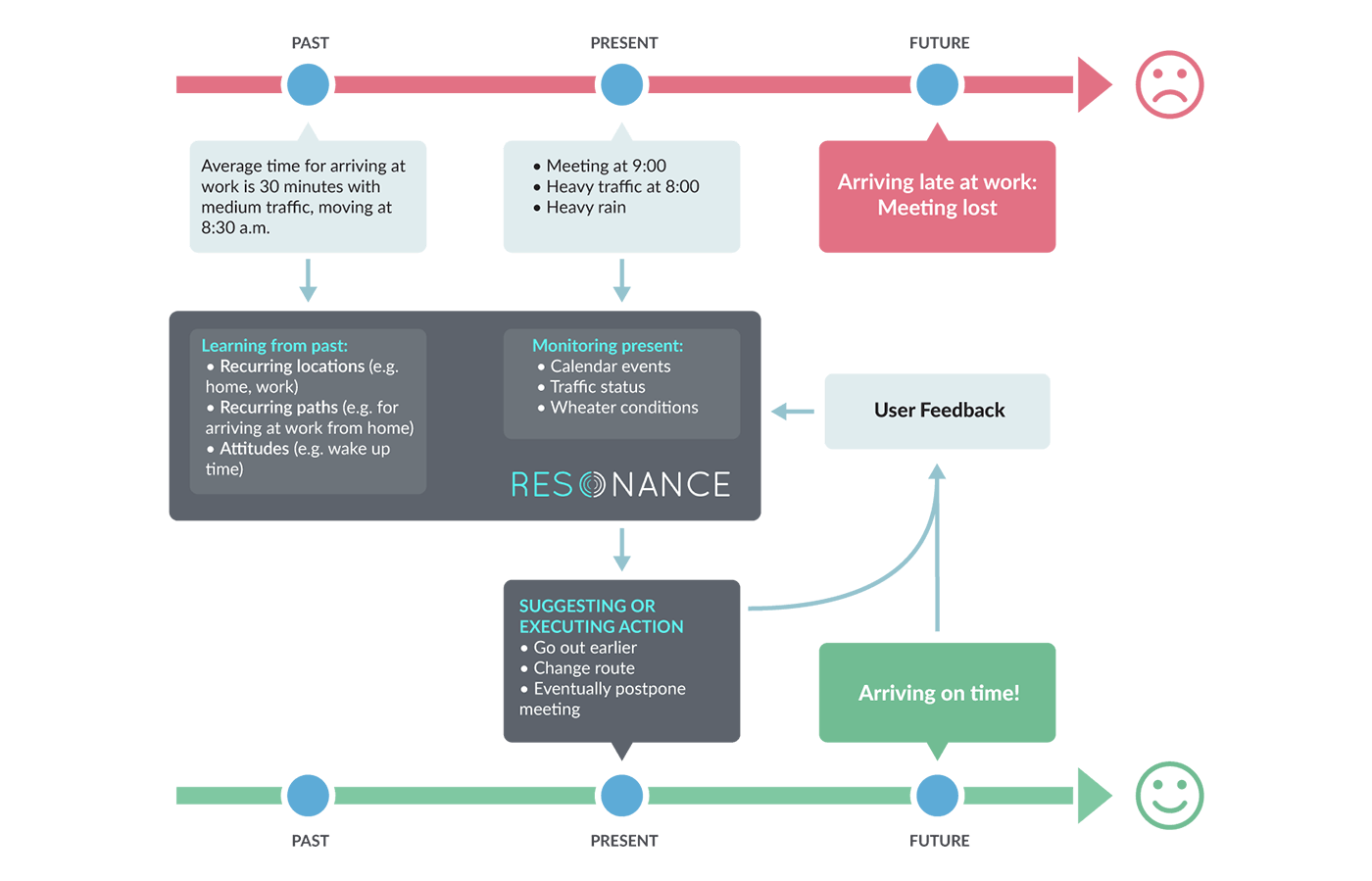

Resonance AI brings context awareness to personal cross-platform applications while reducing the effort required of developers working on customer-oriented applications and services.

The core idea is that analyzing user habits and monitoring the user's current context make it possible to predict future needs and prevent unwanted scenarios.

2. Provided Features

Resonance AI provides the following features, which can be extensively customized through an advanced web console.

2.1. Context API

Context Detection is the core concept of Resonance AI. The information collected from various data sources (e.g. Android / iOS devices, wearables, external systems) is processed (through advanced models developed for Google TensorFlow) and combined in order to determine what the user is doing at a given moment.

Some examples of the contexts that Resonance AI recognizes are listed below:

- Home

- Work

- Bicycle Workout

- Cinema

- Restaurant

- Business Trip by Train

Resonance AI implements two approaches to Context Detection:

- Post Processing

- Real Time Processing

How to use real-time processing is described in more depth in the Android / iOS sections.
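As a rough illustration of how the two approaches differ, the sketch below assumes that real-time processing labels each sample as soon as it arrives, while post processing revisits a recorded batch and can use neighbouring samples to correct isolated misclassifications. The `Sample` fields, the `classify` rules, and the majority-vote smoothing are all hypothetical stand-ins and do not represent the actual Resonance models or API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Sample:
    """One fused reading from the data sources (hypothetical fields)."""
    place: str        # e.g. "home", "office", "road"
    speed_kmh: float  # current speed estimate


def classify(sample: Sample) -> str:
    """Toy stand-in for the real models: map one sample to a context label."""
    if sample.place == "road" and sample.speed_kmh > 8:
        return "Bicycle Workout"
    if sample.place == "office":
        return "Work"
    if sample.place == "home":
        return "Home"
    return "Unknown"


def detect_real_time(stream: Iterable[Sample]) -> Iterator[str]:
    """Real-time style: emit a label as soon as each sample arrives."""
    for sample in stream:
        yield classify(sample)


def detect_post(recorded: List[Sample], window: int = 3) -> List[str]:
    """Post-processing style: relabel each sample by majority vote
    over a centered window of neighbouring raw labels."""
    raw = [classify(s) for s in recorded]
    half = window // 2
    smoothed = []
    for i in range(len(raw)):
        neighbours = raw[max(0, i - half): i + half + 1]
        smoothed.append(Counter(neighbours).most_common(1)[0][0])
    return smoothed
```

In this sketch, a single "office" reading surrounded by "home" readings is reported as Work by `detect_real_time` but is smoothed back to Home by `detect_post`, which shows the trade-off between immediacy and robustness.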

2.2. Info API

Resonance AI implements a set of functions that return specific information about users and their habits.

For example, Resonance AI is able to answer questions such as:

- What are the user's Home / Work locations?

- Where did the user park their car?

- The user is driving. What is the most probable destination?

- What are the user’s favorite restaurants?

An important point is that this information comes directly from data analysis on the backend and does not require direct user input (if needed, user feedback can be used to adjust the results).

More details on how to use the Info API are provided in the Android / iOS / Web sections.
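To give an idea of how an answer can be derived from collected data without asking the user, here is a minimal sketch that guesses a Home location as the place most often observed at night. The `guess_home` function, the night-hours heuristic, and the coordinate rounding are assumptions made for illustration only; they are not the Info API and not the analysis that the Resonance backend actually performs.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Optional, Tuple

# (timestamp, latitude, longitude); a hypothetical record layout.
Fix = Tuple[datetime, float, float]


def guess_home(fixes: Iterable[Fix], precision: int = 3) -> Optional[Tuple[float, float]]:
    """Return the most frequent rounded coordinate seen between 22:00 and 06:00,
    or None when there are no night-time fixes."""
    cells: Counter = Counter()
    for ts, lat, lon in fixes:
        if ts.hour >= 22 or ts.hour < 6:
            # Rounding groups nearby fixes into the same coarse cell.
            cells[(round(lat, precision), round(lon, precision))] += 1
    if not cells:
        return None
    return cells.most_common(1)[0][0]
```

A user correction, as mentioned above, could then simply override or re-weight the guessed cell.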

2.3. Rule Engine

Resonance AI provides proprietary APIs that allow developers to listen to context changes (as well as context evolution) and to use them to trigger actions. This can be done either with a Low Level API (discussed in more depth in the Android / iOS sections) or with the Resonance Distributed Rule Engine.

The Rule Engine is a subsystem within Resonance AI that allows developers to activate and execute IF-DO smart actions (or rules) when users enter (or exit) a specific context.

Rule execution is distributed between the devices and the backend. This is essential to keep rules available even in restrictive environments such as iOS.

The Resonance AI web console provides a simple editor for defining rules and assigning them to contexts. Moreover, based on user behavior analysis, Resonance suggests which rules are best suited for implementation.
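The IF-DO model described in this section can be pictured with a small, single-process sketch: a rule binds an action to entering or exiting a context, and the engine fires matching rules whenever the detected context changes. The `Rule` and `RuleEngine` names and their methods are hypothetical and are not the Resonance API; the distribution of execution between devices and backend is not modelled here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Rule:
    context: str                # context label the rule watches (the IF part)
    on: str                     # "enter" or "exit"
    action: Callable[[], None]  # the DO part


class RuleEngine:
    def __init__(self) -> None:
        self._rules: List[Rule] = []
        self._current: Optional[str] = None

    def add_rule(self, rule: Rule) -> None:
        self._rules.append(rule)

    def update(self, new_context: Optional[str]) -> None:
        """Feed the latest detected context; fire exit rules, then enter rules."""
        old = self._current
        if new_context == old:
            return  # no transition, nothing to fire
        self._current = new_context
        for rule in self._rules:
            if rule.on == "exit" and rule.context == old:
                rule.action()
        for rule in self._rules:
            if rule.on == "enter" and rule.context == new_context:
                rule.action()
```

For example, registering an "enter Home" rule and an "exit Home" rule and then calling `update("Home")` followed by `update("Work")` runs the first action once and then the second once; repeated updates with an unchanged context fire nothing.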

3. Getting Started

This section provides a step-by-step guide to creating a Resonance AI-powered application from scratch.

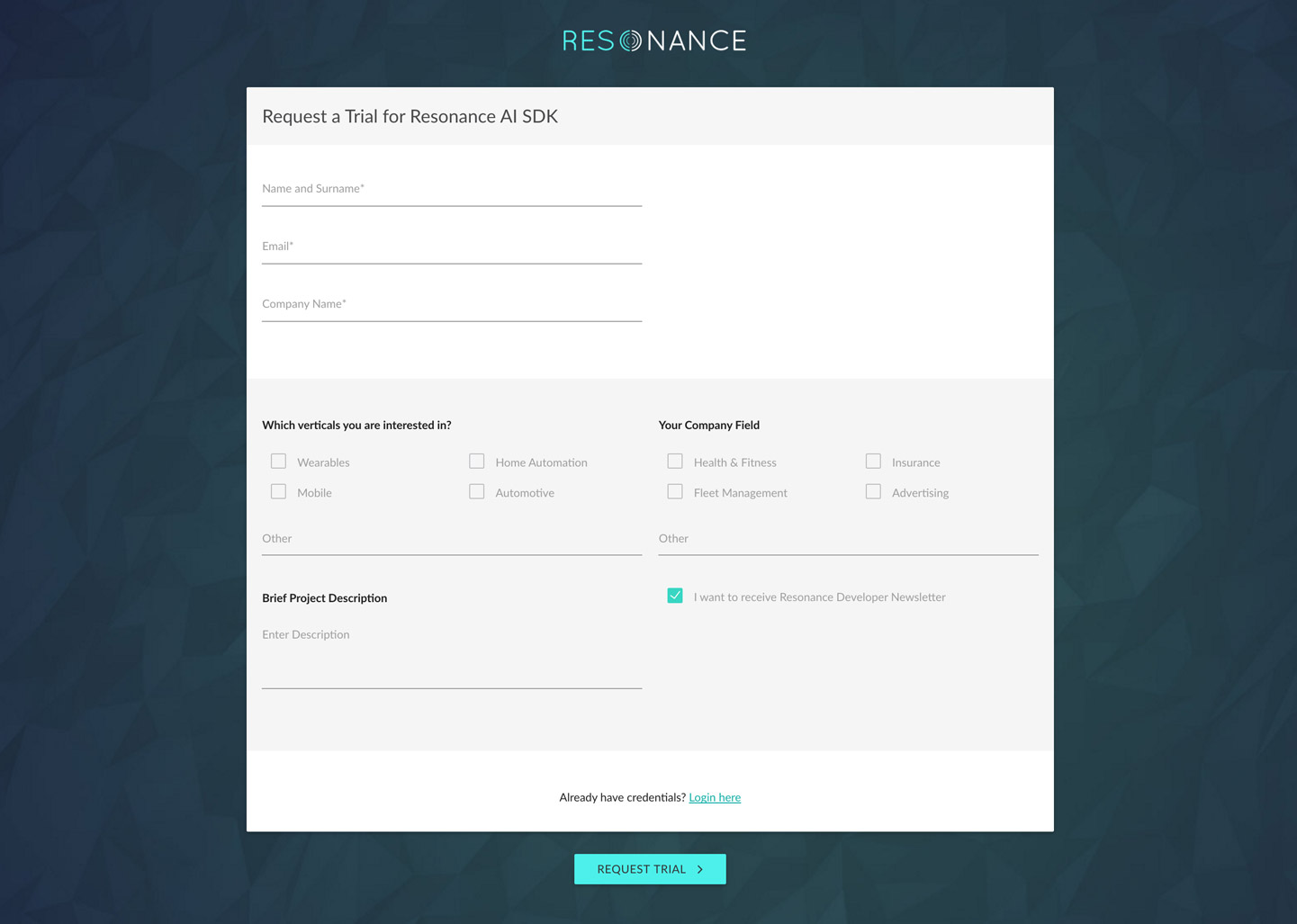

As a first step, request a trial and fill in the form (example below). Please be as detailed as possible. Our team will get back to you as soon as possible with a private link to access our dashboard.

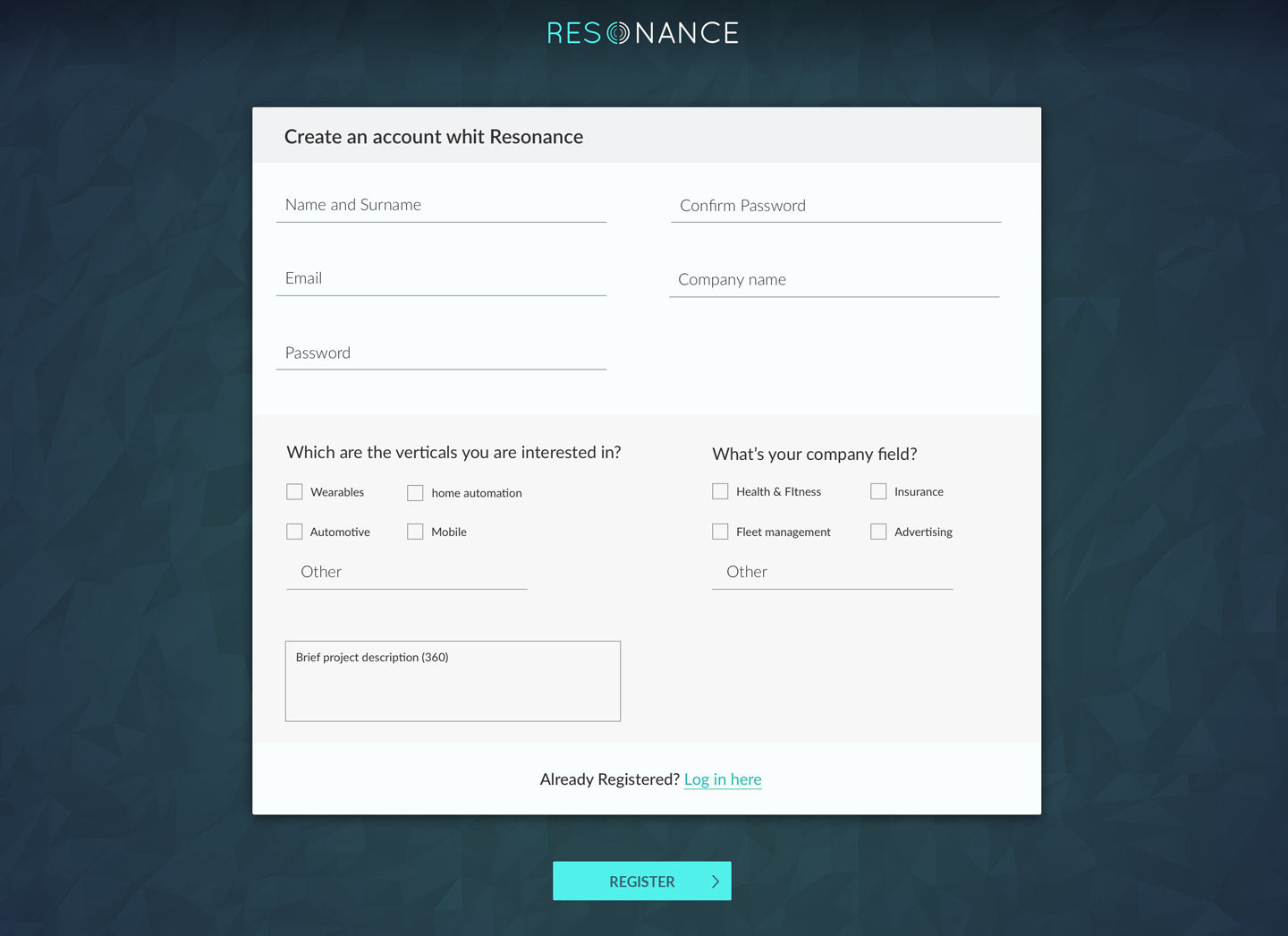

Follow the private link and complete the form to create an account, as shown in the screenshot below:

Once your account is activated, you can log in to the web console. Press the New Project button to launch the creation wizard. The creation process consists of three main steps:

- Declaring the features of interest - This is a mandatory step and obviously depends on the project itself.

- Configuring data sources - Based on the choices made in step 1, the Resonance platform automatically selects a set of data sources. The developer can change this configuration (typically by removing wearables or other external systems that are not of interest), but with some restrictions. Too many changes may reduce the effectiveness of the selected features.

- Configuring the Rule Engine [optional] - The first two steps enable the use of the Resonance Context API and Info API. In this step, you can declare whether the project will link IF-DO smart actions to specific contexts.

The following sections describe each of these steps in more depth.

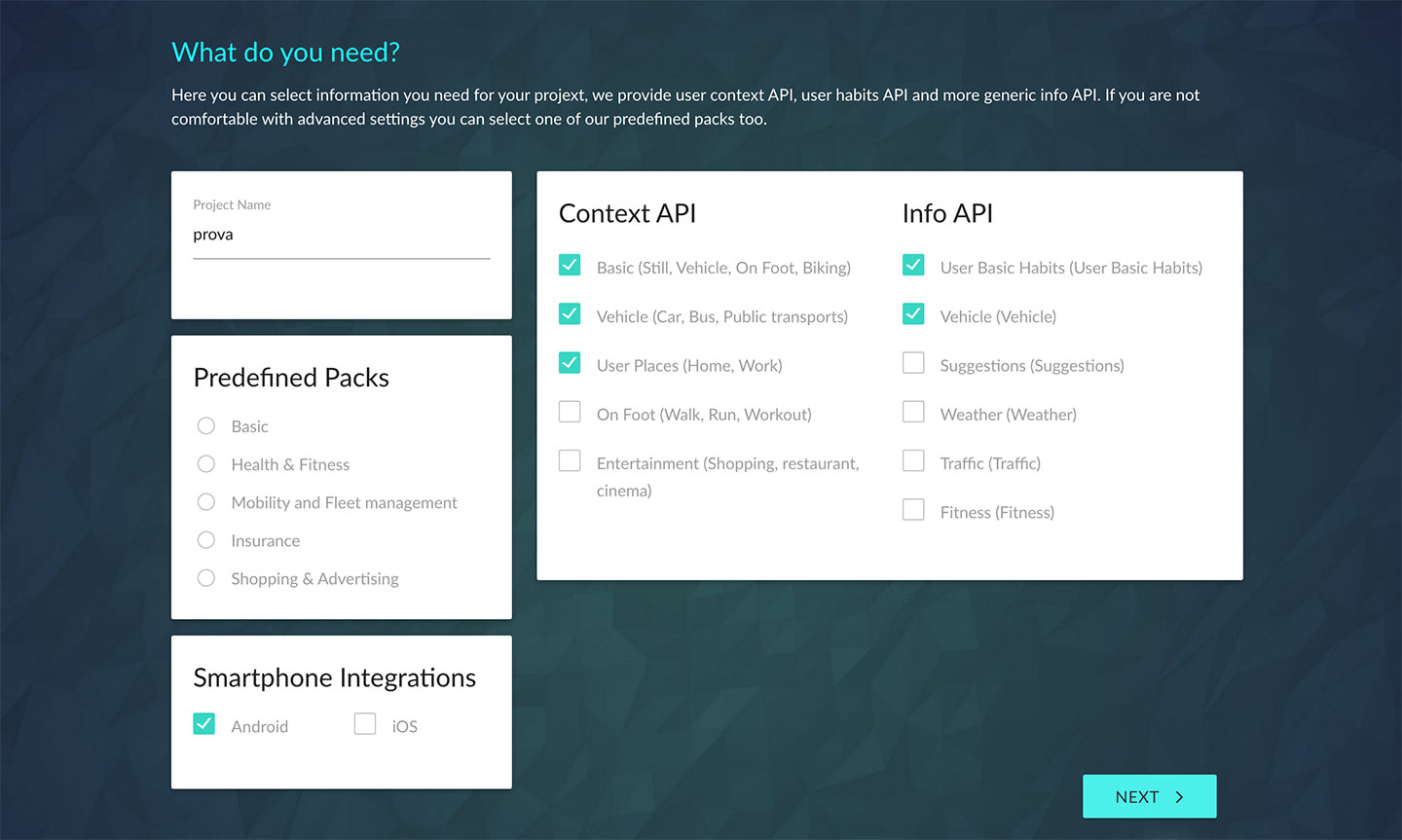

3.1. Declaring Features of Interest

The screenshot below shows how the features of interest can be declared. To simplify this process, Resonance provides a set of Predefined Packs. Each pack includes a subset of Context API and Info API functions.

More advanced users can ignore the Predefined Packs panel and select the functions of interest directly.
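Conceptually, a pack is a named bundle of functions, and the final selection is the union of the chosen packs and any functions picked directly. The sketch below illustrates that idea only; the pack names, the function identifiers, and `resolve_functions` are invented for the example and do not reflect the packs actually offered in the console.

```python
from typing import Dict, FrozenSet, Iterable, Set

# Hypothetical packs; each one bundles Context API and Info API functions.
PACKS: Dict[str, FrozenSet[str]] = {
    "places": frozenset({
        "context.home",
        "context.work",
        "info.favorite_restaurants",
    }),
    "mobility": frozenset({
        "context.bicycle_workout",
        "info.parked_car",
        "info.probable_destination",
    }),
}


def resolve_functions(packs: Iterable[str] = (), extra: Iterable[str] = ()) -> Set[str]:
    """Expand the chosen packs and merge them with directly selected functions."""
    selected = set(extra)
    for name in packs:
        selected |= PACKS[name]  # raises KeyError for an unknown pack name
    return selected
```

Calling `resolve_functions(packs=["places"], extra=["info.parked_car"])` yields the three functions of the "places" pack plus the one selected by hand, while passing only `extra` corresponds to skipping the packs entirely.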

Besides functions, developers must also select the mobile platforms they want to target. This is extremely important because mobile devices are used not only to run applications but also to collect data. Moreover, some functions rely heavily on platform-specific data, so the absence of this data may reduce their effectiveness (e.g. location-based functionalities rely on GPS data).

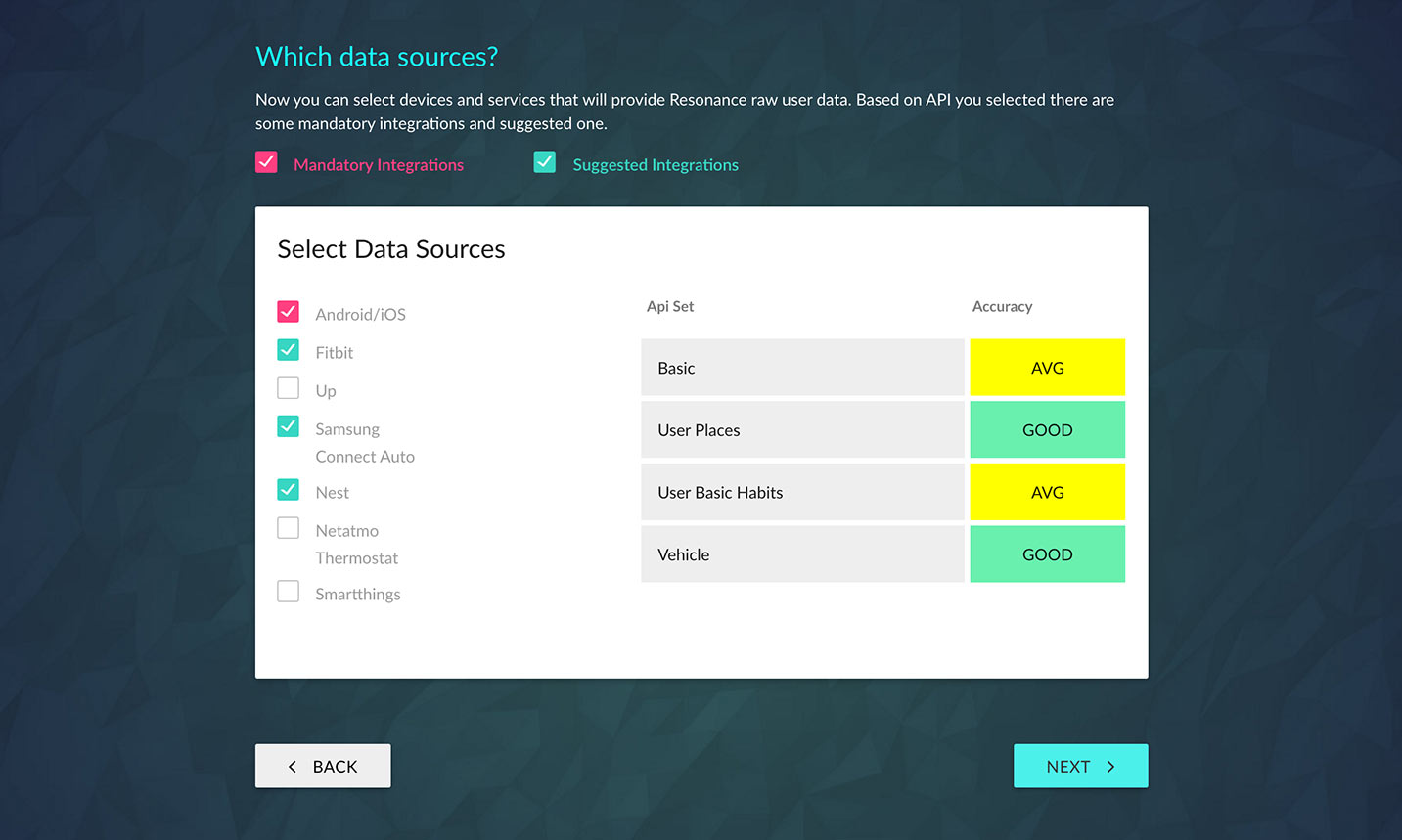

3.2. Data Sources Definition

Defining data sources is usually a complex activity. The Resonance platform simplifies it by providing a pre-selection of devices and external systems that can effectively contribute to the functionalities declared in the previous step.

Note that the developer can remove some data sources, but others are essential to the functionalities of interest and therefore cannot be excluded.
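The restriction can be pictured as a dependency check: each selected feature has data sources it cannot work without, and a removal is accepted only when no selected feature depends on that source. The following sketch makes this concrete under assumed feature and source names; the `REQUIRED` and `OPTIONAL` tables and both functions are hypothetical and only mirror the behavior described above.

```python
from typing import Dict, Set

# Hypothetical mapping: data sources each feature cannot work without.
REQUIRED: Dict[str, Set[str]] = {
    "home_work_locations": {"phone_gps"},
    "parked_car_location": {"phone_gps", "phone_activity"},
}

# Hypothetical mapping: sources that improve a feature but can be removed.
OPTIONAL: Dict[str, Set[str]] = {
    "home_work_locations": {"phone_wifi"},
    "parked_car_location": {"car_bluetooth", "wearable_steps"},
}


def preselect_sources(features: Set[str]) -> Set[str]:
    """Pre-select every source that can contribute to the chosen features."""
    sources: Set[str] = set()
    for feature in features:
        sources |= REQUIRED[feature] | OPTIONAL[feature]
    return sources


def remove_source(selected: Set[str], features: Set[str], source: str) -> Set[str]:
    """Return the selection without `source`, refusing essential sources."""
    blocking = sorted(f for f in features if source in REQUIRED[f])
    if blocking:
        raise ValueError(f"{source} is essential for: {', '.join(blocking)}")
    return selected - {source}
```

With only `home_work_locations` selected, removing `phone_wifi` succeeds, whereas removing `phone_gps` raises a `ValueError` naming the feature that depends on it.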

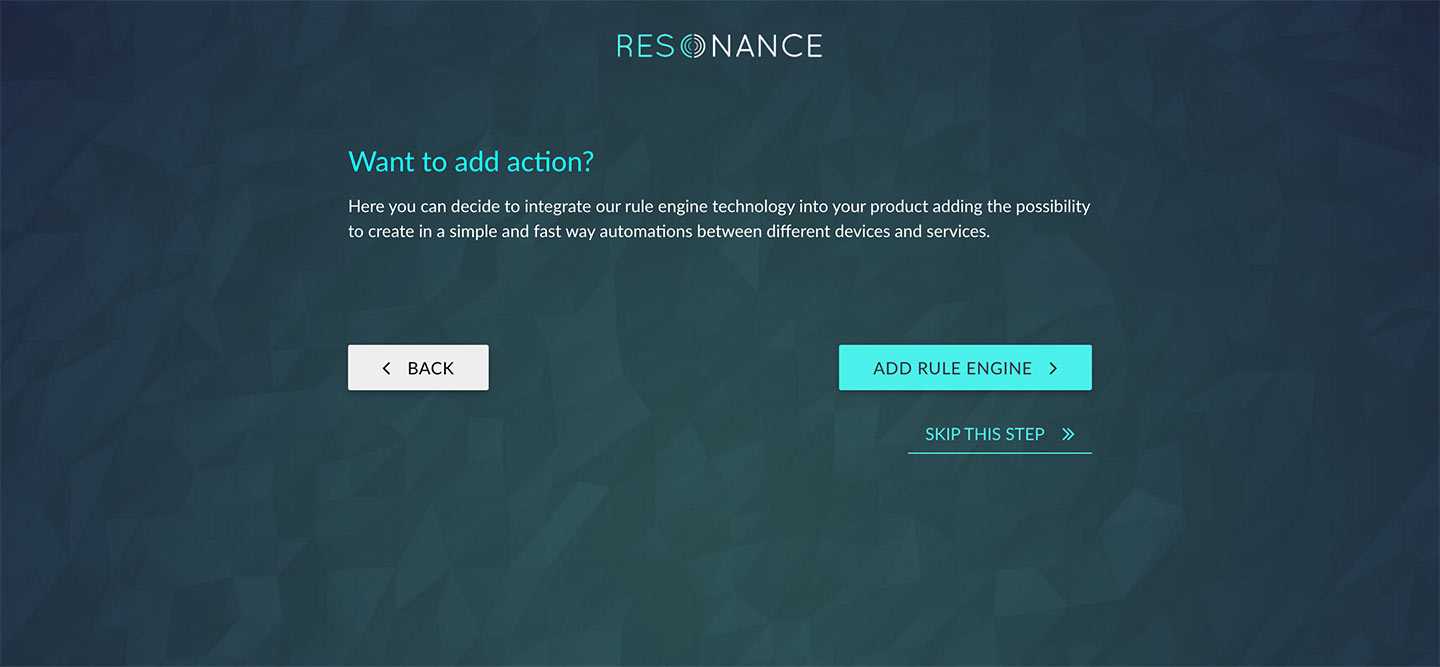

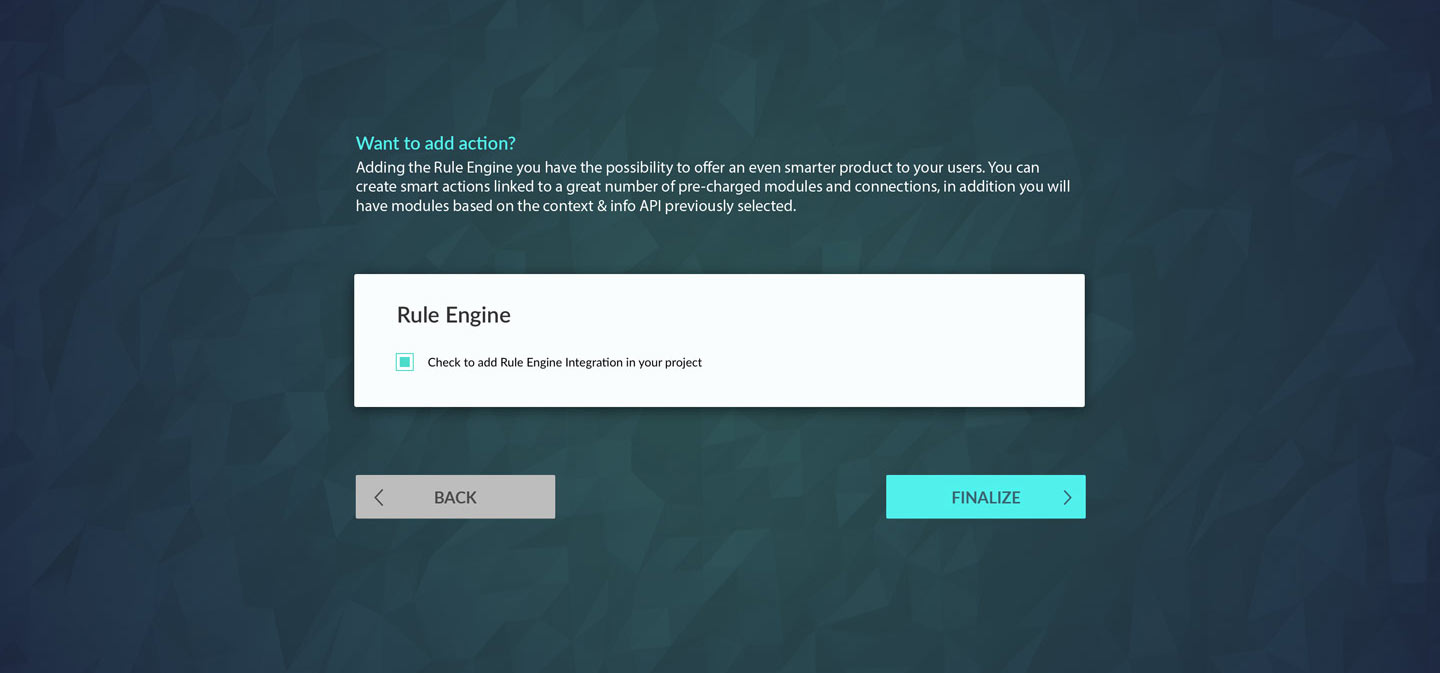

3.3. Rule Engine Configuration

Once the functionalities and the data sources have been selected, the developer is asked whether to enable the Rule Engine (this can also be done at any later time).

When the Rule Engine is added, Resonance automatically selects all the modules related to the devices and systems chosen in the data source definition step.

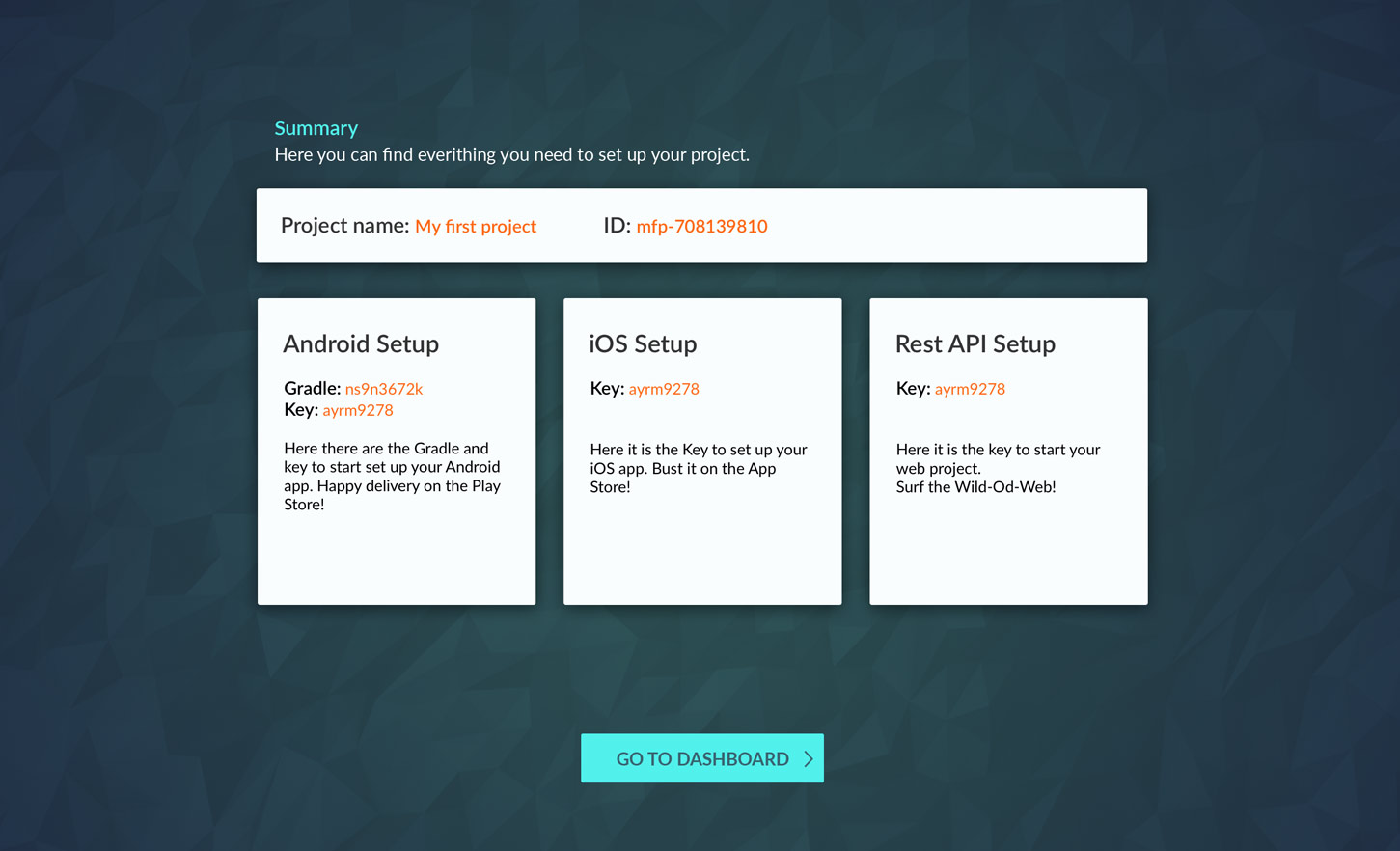

Once the configuration is complete, the console summarizes all the settings and provides instructions for configuring your mobile and web applications to use the selected features.